Taming Concurrent AI Inference and High-Resolution HMI on the ESP32-S3

The embedded systems landscape is undergoing a fascinating transformation. We increasingly want compact devices to deliver capabilities once reserved for servers or high-end processors: fluid, detailed graphical user interfaces (HMIs) coupled with real-time, local Artificial Intelligence (AI) decision-making. Microcontrollers (MCUs) like Espressif's ESP32-S3 seem to open the door. They offer respectable dual-core performance, wireless connectivity (Wi-Fi and Bluetooth LE), and even vector instructions aimed at accelerating AI workloads. However, practical engineering challenges surface quickly once we try to run two demanding workloads on a single ESP32-S3. The first is an application that performs continuous AI inference, such as facial recognition or voice command detection. The second is a complex GUI that must render smoothly on an 800x480 touchscreen. This is far more than adding features. It is an intricate balancing act dictated by finite resources: compute power, memory bandwidth, and power consumption. Let's examine the critical hurdles in this resource management ballet.

The First Core Challenge: Memory Bandwidth – The Unseen Bottleneck

Driving an 800x480 display at 16 bits per pixel (RGB565) requires 800 × 480 × 2 = 768,000 bytes (750 KiB) just for the framebuffer, the memory holding the pixel data for a single screen image. The ESP32-S3 has 512 KB of on-chip SRAM, which must also hold stacks, the heap, and other runtime data. A single framebuffer therefore does not fit, and external PSRAM (pseudo-static RAM) becomes a necessity. PSRAM offers far larger capacity, but its access speed and bandwidth lag significantly behind on-chip SRAM. Herein lies a crucial bottleneck.
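The framebuffer arithmetic is easy to get wrong once double buffering enters the picture, so a tiny helper is worth having. The sketch below is plain, host-runnable C. The function names are hypothetical. The 512 KiB budget used in the note afterwards is the raw SRAM size, before anything else that must live there is subtracted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Bytes for one full frame: width x height x bytes per pixel.
 * RGB565 uses 2 bytes per pixel. (Hypothetical helper.) */
size_t framebuffer_bytes(uint32_t width, uint32_t height, uint32_t bytes_per_pixel)
{
    assert(bytes_per_pixel >= 1u && bytes_per_pixel <= 4u);
    return (size_t)width * height * bytes_per_pixel;
}

/* True if `count` framebuffers (e.g. 2 for double buffering) fit in
 * `budget_bytes` of RAM, ignoring everything else that RAM must hold. */
bool framebuffers_fit(size_t fb_bytes, unsigned count, size_t budget_bytes)
{
    return (uint64_t)fb_bytes * count <= budget_bytes;
}
```

For the panel in the text, `framebuffer_bytes(800, 480, 2)` is 768,000 bytes (750 KiB). Double buffering doubles that to 1,536,000 bytes. Even a single buffer fails the raw-SRAM check, because `framebuffers_fit(768000, 1, 512u * 1024u)` returns false.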

Imagine the scenario: a GUI rendering engine (like LVGL) is actively updating screen regions. It frequently reads existing pixel data from PSRAM, computes new values, and writes them back. Finally, it transfers the updated blocks to the panel over the display interface, be it SPI or a faster parallel RGB interface. A parallel RGB interface adds a further cost because it has to be fed continuously. The LCD peripheral's DMA streams the entire framebuffer out of PSRAM on every refresh, even when nothing on screen has changed. This process is inherently memory-bandwidth intensive. At the same time, our AI inference task (running, say, a TensorFlow Lite Micro model) must do three things. It fetches model weights from PSRAM. It reads input data, perhaps from a camera or microphone buffer filled via DMA. It also stores intermediate activation values back into PSRAM during computation.
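To get a feel for how quickly redraws add up, one can model the bus traffic of a single LVGL-style update. The model below is an illustrative assumption, not a measurement. Every redrawn pixel counts as one PSRAM read for blending, one write, and one more read by the DMA that ships it to the panel. In practice LVGL often renders into a smaller draw buffer that may live in internal SRAM, which removes some of these passes. `area_t` and the function name are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed PSRAM passes per redrawn pixel: read (blend), write, DMA read. */
#define PSRAM_PASSES_PER_PIXEL 3u

/* A dirty rectangle; only its size matters for this estimate. */
typedef struct {
    uint32_t w;
    uint32_t h;
} area_t;

/* Estimated PSRAM bytes moved to redraw and flush `n` dirty areas. */
uint64_t update_traffic_bytes(const area_t *areas, size_t n, uint32_t bytes_per_pixel)
{
    assert(areas != NULL || n == 0);
    uint64_t total = 0;
    for (size_t i = 0; i < n; i++) {
        uint64_t area_bytes = (uint64_t)areas[i].w * areas[i].h * bytes_per_pixel;
        total += area_bytes * PSRAM_PASSES_PER_PIXEL;
    }
    return total;
}
```

Consider a 200x100 button plus an 800x48 status bar in RGB565. Under this model they cost 350,400 bytes per update. At 30 updates per second, that is over 10 MB/s before the AI task reads a single weight.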

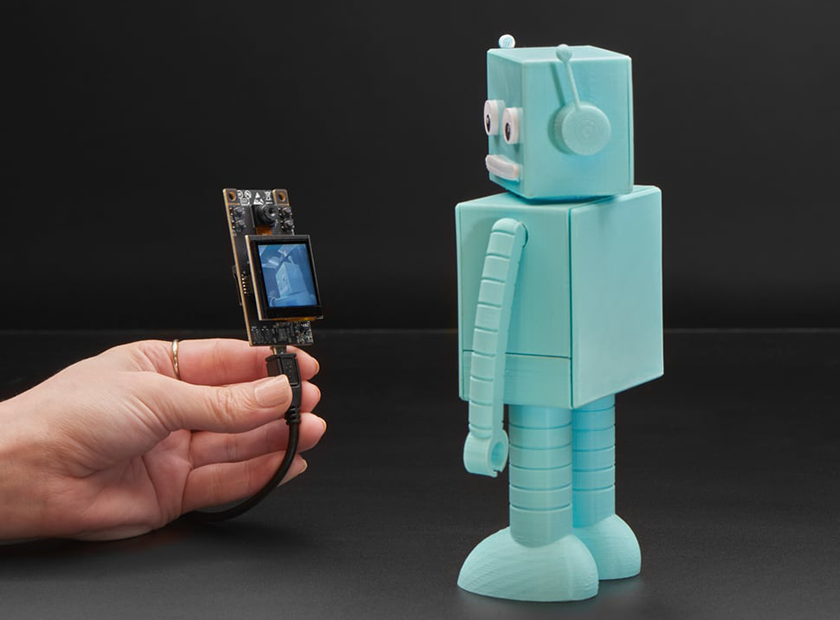

ESP32-S3 Listening

At this point, the memory bus becomes a point of fierce contention. Various community deep-dives and technical blogs have analyzed ESP32/PSRAM performance, often exploring SPI PSRAM timings and effective bandwidth. They describe what happens when the CPU cores and the DMA controllers (handling peripheral data and display transfers) all access PSRAM concurrently: each gets only a share of the available bandwidth, and access latency increases. The consequence? GUI rendering might stutter or drop frames while waiting for memory access. Similarly, AI inference speed could plummet due to delays in data retrieval, potentially compromising real-time requirements. Several measures can help: scheduling memory access effectively, optimizing data locality, and perhaps even employing careful caching strategies despite the MCU's limited cache. Combining them is a thorny system-level optimization problem. It demands more than just writing code. It requires a deep understanding of hardware characteristics and low-level system interactions.

The Second Core Challenge: Compute Allocation & Real-Time Guarantees – The Dual-Core Gambit

The ESP32-S3's dual-core Xtensa LX7 architecture suggests a potential solution. Dedicate one core to the latency-sensitive work of HMI rendering and touch response, and assign the computationally intensive, more latency-tolerant AI inference to the other. This is appealing in theory. In practice, partitioning the tasks precisely and managing the synchronization and communication between them presents its own set of difficulties.
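The partitioning rule can be written down as data and checked before any task is created. In this hypothetical sketch, each `task_plan_t` entry would map onto one ESP-IDF `xTaskCreatePinnedToCore()` call. The `core` field would be passed as its `xCoreID` argument and `priority` as `uxPriority`. The checker itself is plain C and runs anywhere. Which core gets the HMI is left open, since that depends on where the rest of the system, such as the Wi-Fi stack, is pinned.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef enum { ROLE_HMI, ROLE_AI, ROLE_OTHER } task_role_t;

/* One planned task (hypothetical). On ESP-IDF, `core` would be passed as
 * xCoreID and `priority` as uxPriority to xTaskCreatePinnedToCore(). */
typedef struct {
    const char *name;
    task_role_t role;
    int         core;     /* 0 or 1 on the dual-core ESP32-S3 */
    unsigned    priority; /* FreeRTOS: larger number = higher priority */
} task_plan_t;

/* Assumed rule: all HMI tasks share one core, all AI tasks share one core,
 * and the two roles never share the same core. ROLE_OTHER is unconstrained. */
bool plan_is_partitioned(const task_plan_t *plan, size_t n)
{
    assert(plan != NULL || n == 0);
    int hmi_core = -1;
    int ai_core = -1;
    for (size_t i = 0; i < n; i++) {
        int *seen;
        if (plan[i].role == ROLE_HMI) {
            seen = &hmi_core;
        } else if (plan[i].role == ROLE_AI) {
            seen = &ai_core;
        } else {
            continue;
        }
        if (plan[i].core != 0 && plan[i].core != 1) {
            return false;              /* no such core */
        }
        if (*seen == -1) {
            *seen = plan[i].core;      /* first task of this role */
        } else if (*seen != plan[i].core) {
            return false;              /* role split across cores */
        }
    }
    return hmi_core == -1 || ai_core == -1 || hmi_core != ai_core;
}
```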

For instance, suppose the AI model (running on Core B) detects a specific event, such as a particular gesture. It must then notify the GUI (running on Core A) to update interface elements. This inter-core communication typically relies on FreeRTOS queues, semaphores, or other synchronization primitives. If this communication pathway or the GUI's subsequent handling is inefficient, the user perceives lag. The reverse also holds. Complex GUI rendering that takes significant time on Core A can indirectly slow the AI task on Core B, especially if it frequently accesses shared resources like PSRAM.
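LVGL is not thread-safe, so a common pattern is for the AI task never to touch GUI objects directly. Instead it posts a small event, and the GUI task drains pending events once per frame and applies them. On ESP-IDF this would normally be a FreeRTOS queue, with `xQueueSend()` called from the AI task and `xQueueReceive()` called with a zero timeout in the GUI task. The sketch below shows the same non-blocking, single-producer/single-consumer idea with C11 atomics so it can run on a host. The event types and function names are hypothetical. The sketch assumes exactly one producer and one consumer.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define EVT_QUEUE_LEN 8u
static_assert((EVT_QUEUE_LEN & (EVT_QUEUE_LEN - 1u)) == 0u,
              "EVT_QUEUE_LEN must be a power of two");

typedef enum { EVT_FACE_RECOGNIZED, EVT_GESTURE } evt_kind_t;

typedef struct {
    evt_kind_t kind;
    int32_t    label;      /* class index reported by the model */
    uint8_t    confidence; /* percent */
} ai_event_t;

/* Single-producer (AI task) / single-consumer (GUI task) ring buffer. */
typedef struct {
    ai_event_t  slots[EVT_QUEUE_LEN];
    atomic_uint head; /* advanced only by the producer */
    atomic_uint tail; /* advanced only by the consumer */
} evt_queue_t;

void evt_queue_init(evt_queue_t *q)
{
    atomic_init(&q->head, 0u);
    atomic_init(&q->tail, 0u);
}

/* AI side: never blocks. Returns false, dropping the event, when full. */
bool evt_queue_push(evt_queue_t *q, ai_event_t e)
{
    unsigned head = atomic_load_explicit(&q->head, memory_order_relaxed);
    unsigned tail = atomic_load_explicit(&q->tail, memory_order_acquire);
    if (head - tail == EVT_QUEUE_LEN) {
        return false;
    }
    q->slots[head & (EVT_QUEUE_LEN - 1u)] = e;
    /* Release: the slot write becomes visible before the new head. */
    atomic_store_explicit(&q->head, head + 1u, memory_order_release);
    return true;
}

/* GUI side: call in a loop once per frame until it returns false,
 * applying each event to LVGL objects from the GUI task only. */
bool evt_queue_pop(evt_queue_t *q, ai_event_t *out)
{
    unsigned tail = atomic_load_explicit(&q->tail, memory_order_relaxed);
    unsigned head = atomic_load_explicit(&q->head, memory_order_acquire);
    if (head == tail) {
        return false;
    }
    *out = q->slots[tail & (EVT_QUEUE_LEN - 1u)];
    atomic_store_explicit(&q->tail, tail + 1u, memory_order_release);
    return true;
}
```

Dropping events when the queue is full means the inference loop never blocks on a slow GUI. Whether dropping new events or overwriting the oldest one is the better choice depends on the application.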

Furthermore, AI inference workloads are often non-uniform, with computational intensity varying across the layers of a neural network pass. This raises a hard question: how can task priorities be adjusted dynamically without breaking the GUI's fundamental responsiveness? One idea is to let the AI task momentarily "borrow" resources typically reserved for the GUI, such as peak memory bandwidth. Answering this requires careful Real-Time Operating System (RTOS) scheduling strategies and meticulous performance profiling. Relevant developer forums, such as ESP-IDF's GitHub Discussions or specialized embedded communities, are rife with debates on this topic. Developers argue over how to tune FreeRTOS configurations for such mixed workloads, avoid priority inversion, and balance throughput against latency. The volume of these debates shows how common and intricate this tuning challenge is.
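One way to let the AI task "borrow" resources and then give them back is to run inference in layer-sized slices with a time budget, yielding between slices. Bandwidth-hungry GUI work, such as an animation flush, then avoids competing with a long, uninterrupted burst of weight fetches. The sketch assumes per-layer cost estimates obtained from profiling. On ESP-IDF, `esp_timer_get_time()` returns a microsecond timestamp suitable for that. The structure, the budget policy, and the commented-out `run_layer` hook are all hypothetical. The sketch also assumes that your inference runtime allows stepping layer by layer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* An inference pass that can be resumed layer by layer (hypothetical). */
typedef struct {
    const uint32_t *layer_cost_us; /* profiled cost of each layer */
    size_t          n_layers;
    size_t          next_layer;    /* where the next slice resumes */
} infer_job_t;

/* Hypothetical policy: while the GUI is animating, inference gets a quarter
 * of the frame period; when the GUI is idle, it may take three quarters. */
uint32_t infer_budget_us(int gui_animating, uint32_t frame_period_us)
{
    return gui_animating ? frame_period_us / 4u : (frame_period_us / 4u) * 3u;
}

/* Runs whole layers until the next one would exceed budget_us, and returns
 * how many ran. At least one layer always runs, so a single layer costlier
 * than the budget cannot stall inference forever. The caller yields between
 * slices (for example with vTaskDelay(1) on FreeRTOS). */
size_t infer_run_slice(infer_job_t *job, uint32_t budget_us)
{
    assert(job != NULL);
    size_t ran = 0;
    uint32_t spent = 0;
    while (job->next_layer < job->n_layers) {
        uint32_t cost = job->layer_cost_us[job->next_layer];
        if (ran > 0 && spent + cost > budget_us) {
            break; /* next layer would overrun this slice */
        }
        /* run_layer(job->next_layer);  hypothetical hook into the runtime */
        spent += cost;
        job->next_layer++;
        ran++;
    }
    return ran;
}
```

Suppose the profiled layer costs are 300, 500, 200, and 900 µs and the budget is 800 µs. Successive calls then run 2, 1, and 1 layers. The last call overruns the budget by design, because the 900 µs layer cannot be split.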

Inside Overview

Integrated Platforms: End of the Road, or a New Starting Line?

Given these intricate hardware-software co-design challenges, building such a system from scratch – selecting the screen, designing the PCB, writing low-level drivers – undeniably consumes significant effort. This makes integrated HMI platforms, such as the Elecrow CrowPanel Advance 5.0", quite appealing. They bundle the ESP32-S3 (often with PSRAM integrated), a high-resolution (e.g., 800x480 IPS) touchscreen, and potentially a high-throughput parallel RGB display interface onto a single board. They usually ship with basic Board Support Packages (BSPs) and drivers as well.

But does this eliminate the challenges? Not entirely. These platforms primarily address the complexities of hardware integration and fundamental driver bring-up, effectively lowering the barrier to entry. They liberate developers from the initial struggle of merely "making the hardware work." However, the deeper system-level optimization hurdles we've discussed – the memory bandwidth bottlenecks, compute allocation conflicts, and real-time guarantees – remain squarely in the developer's path. In fact, having a stable, performant hardware base allows developers to confront these more profound software and system architecture challenges earlier and more directly. Integrated platforms, therefore, aren't the finish line but rather a new starting line, enabling developers to more rapidly reach the stage of 'hardcore' optimization. The question shifts from "Will my screen driver work?" to "Given this hardware, how must I architect my software to make my AI algorithms and LVGL interface dance harmoniously, squeezing out every last drop of performance?"

Conclusion: Charting Unexplored Territory

Marrying demanding AI inference with a high-resolution, fluid HMI on an MCU like the ESP32-S3 is challenging, yet the potential payoff is immense. Developers need application-level expertise and also a deep grasp of underlying hardware traits, memory systems, and RTOS scheduling intricacies. They must also be willing to perform detailed system-level performance tuning. The work goes beyond simple "feature implementation" and becomes something closer to precision engineering within a resource-constrained environment.

Currently, there's no single "silver bullet" solution. The community's exploration is ongoing. New optimization techniques and software library designs keep emerging, and future hardware capabilities are eagerly anticipated. As developers, we are active participants in this exploration, and each successful attempt pushes the boundaries of what's possible in embedded intelligent interaction.