How to Run DeepSeek R1 on a Raspberry Pi 5: Step-by-Step Guide

DeepSeek has recently become a hot topic in the AI community. It is a powerful open-source large language model designed for a range of AI applications, from natural language processing to content generation, and it comes in multiple model sizes, making it adaptable to different computing environments.

While DeepSeek-R1 is typically run on high-performance hardware, it can also be deployed on a Raspberry Pi 5 with proper optimization. This guide will walk you through setting up a compatible environment, installing necessary tools, and running DeepSeek-R1 on the Raspberry Pi 5 using the Ollama platform. Whether you're looking for a lightweight model for quick responses or a more advanced version for better accuracy, this tutorial will help you get started.

Four Steps to Run DeepSeek R1 on a Raspberry Pi 5

Step 1: Setting Up a Virtual Environment

To prevent conflicts with the system’s default settings, it’s best to create a virtual environment for model deployment. This ensures that any changes made for DeepSeek do not affect other applications.

Creating a Project Directory and Virtual Environment

Open a terminal and run the following commands, one at a time:

| mkdir /home/pi/my_project |

| cd /home/pi/my_project/ |

| python3 -m venv /home/pi/my_project/ |

Activating the Virtual Environment

Once the virtual environment is created, activate it:

| source /home/pi/my_project/bin/activate |

To deactivate the virtual environment, use:

| deactivate |

Make sure to perform all model setup steps within this virtual environment.
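
A quick way to confirm that the environment is active before continuing is to look at the VIRTUAL_ENV variable, which the activate script sets. The helper below is a minimal sketch; the name in_venv is made up for this example.

```shell
# Succeed if a Python virtual environment is active in this shell.
# The activate script exports VIRTUAL_ENV; `deactivate` unsets it again.
in_venv() {
    [ -n "${VIRTUAL_ENV:-}" ]
}

if in_venv; then
    echo "Virtual environment active: $VIRTUAL_ENV"
else
    echo "No virtual environment active; run: source /home/pi/my_project/bin/activate"
fi
```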

Step 2: Installing the Ollama Platform

DeepSeek-R1 can be run using the Ollama platform, which supports GGUF-format model files. If you choose a model in safetensors format, you’ll first need to convert it to GGUF using llama.cpp.

Checking System Compatibility

Before proceeding, confirm that your Raspberry Pi is running a 64-bit system by running uname -m. A 64-bit Raspberry Pi OS prints aarch64.

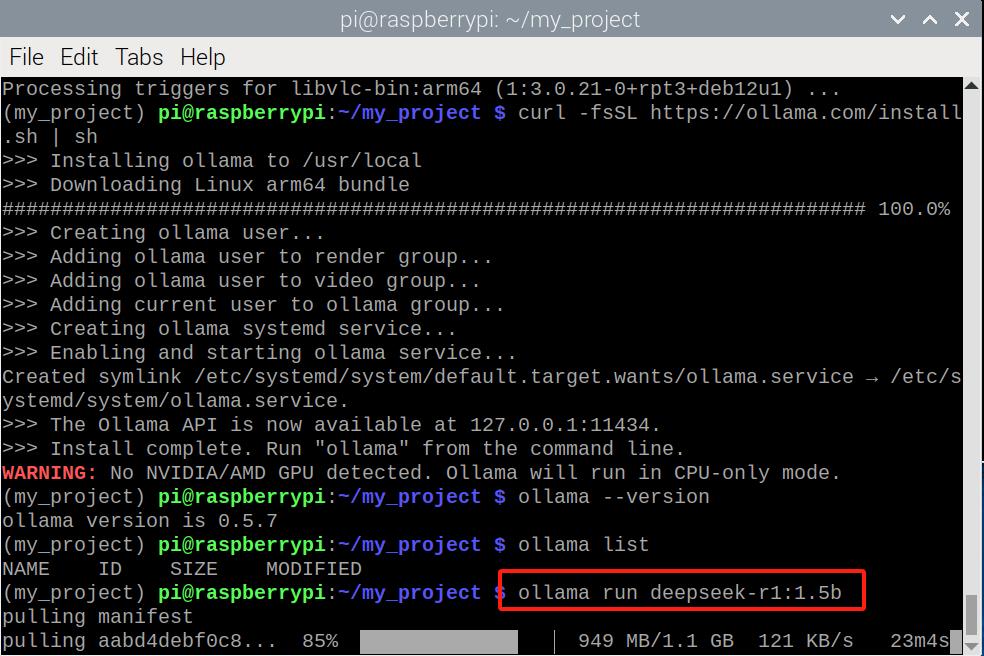
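
If you would rather script this check than read the output by eye, something like the following could work. It is only a sketch: the helper name is_64bit_arch is invented here, and x86_64 is accepted purely so the snippet also behaves sensibly on a desktop machine.

```shell
# Succeed if the given machine string (as printed by `uname -m`) is 64-bit.
# aarch64 and arm64 are the 64-bit ARM names; armv7l and armv6l are 32-bit.
is_64bit_arch() {
    case "$1" in
        aarch64|arm64|x86_64) return 0 ;;
        *) return 1 ;;
    esac
}

arch="$(uname -m)"
if is_64bit_arch "$arch"; then
    echo "OK: $arch is a 64-bit system"
else
    echo "Warning: $arch does not look 64-bit; this guide expects a 64-bit OS"
fi
```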

Installing Ollama

First, update your system:

| sudo apt update |

| sudo apt upgrade -y |

Then, install curl (required for downloading Ollama):

| sudo apt install curl |

Now, download and install the Ollama platform:

| curl -fsSL https://ollama.com/install.sh | sh |

Verifying the Installation

Check if Ollama was installed successfully:

| ollama --version |
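
If the version command reports "command not found", the installer most likely did not complete. The sketch below wraps the check so a script can stop early; have_cmd is a hypothetical helper name, not part of Ollama.

```shell
# Succeed if the named command can be found on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd ollama; then
    ollama --version
else
    echo "ollama was not found on PATH; re-run the install step above"
fi
```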

Now that Ollama is ready, let’s proceed with downloading a DeepSeek-R1 model.

Step 3: Running DeepSeek-R1 on Ollama

There are two ways to get the DeepSeek-R1 model:

1. Download and run it directly from Ollama.

2. Download a model from HuggingFace, then import it into Ollama.

Regardless of the method, Ollama is required.

Method 1: Download and Run Directly from Ollama

Ollama offers pre-configured models, making it the easiest way to start.

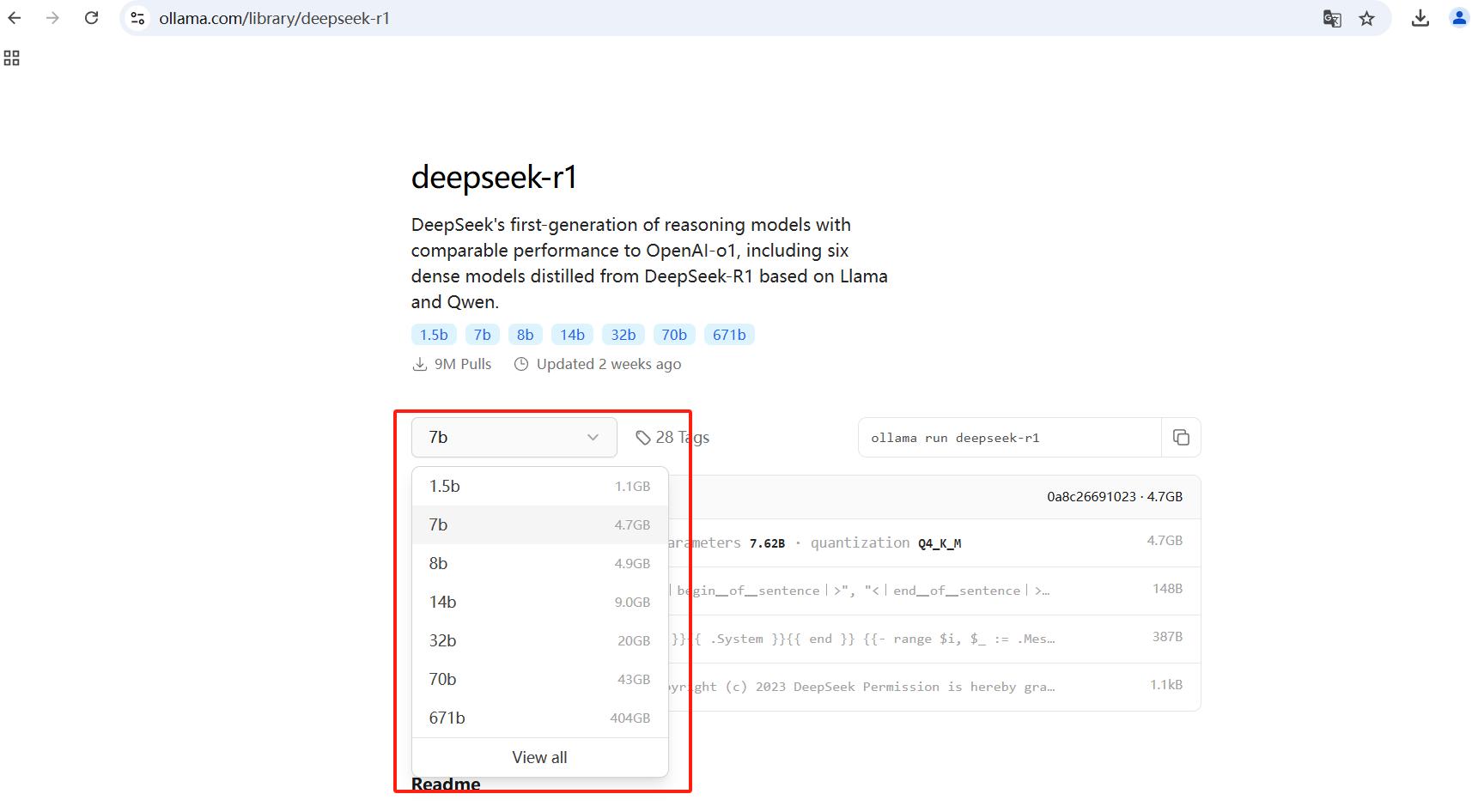

Search for DeepSeek-R1 on the Ollama website and find an appropriate model size.

To run a 1.5B model:

| ollama run deepseek-r1:1.5b |

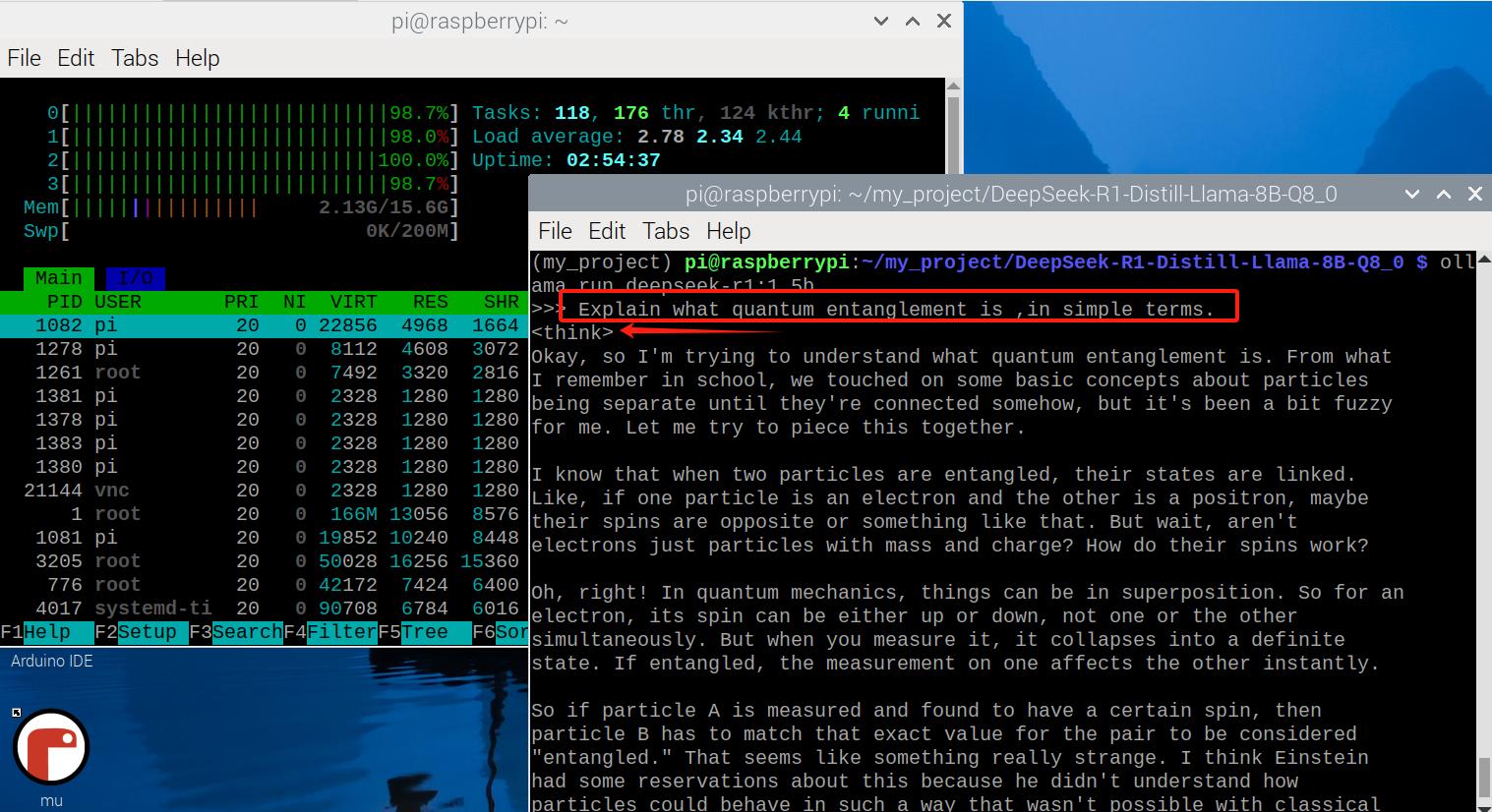

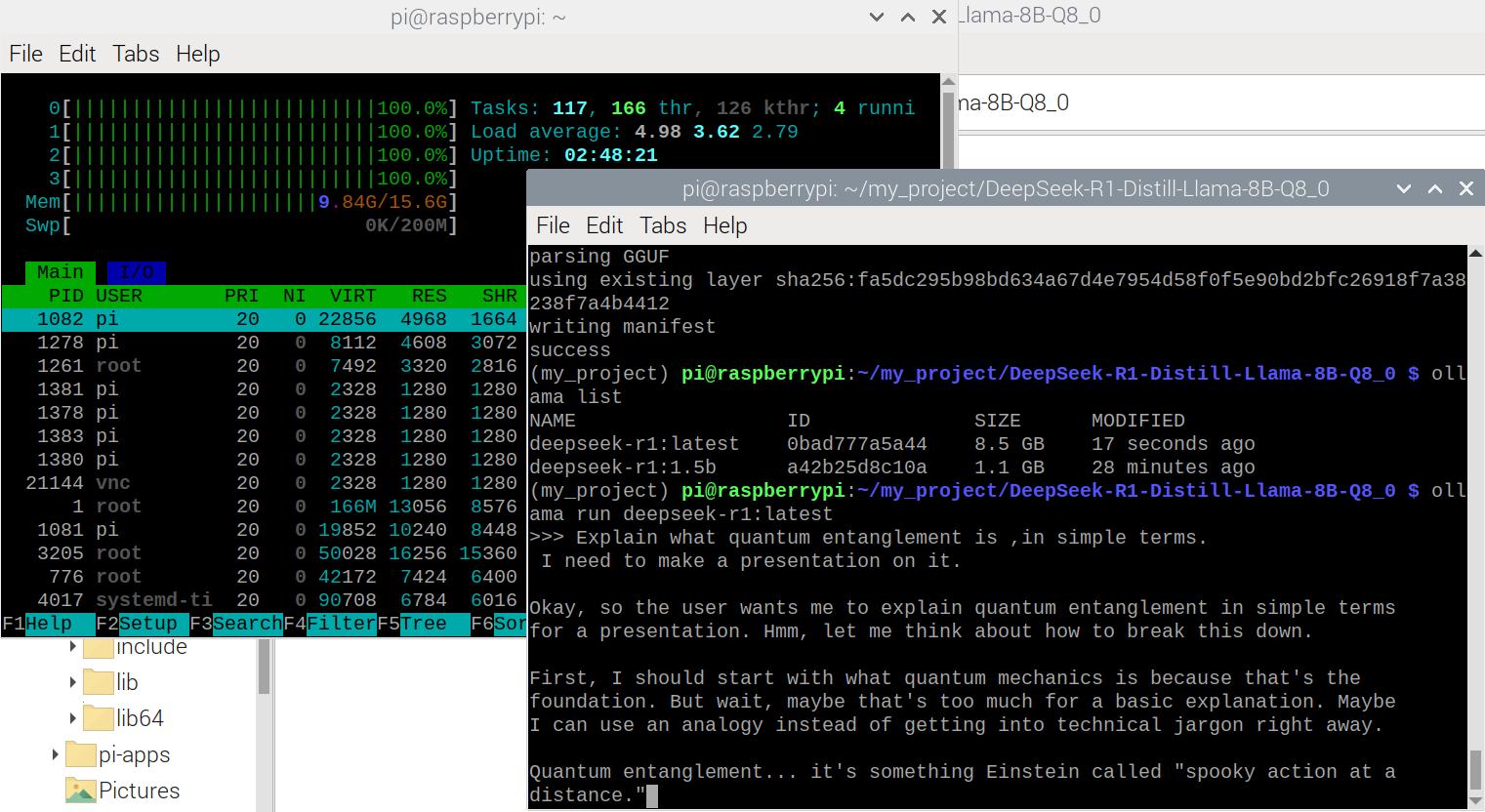

Ask the model a question, like "Explain quantum entanglement in simple terms." It will display its thought process along with its answer.

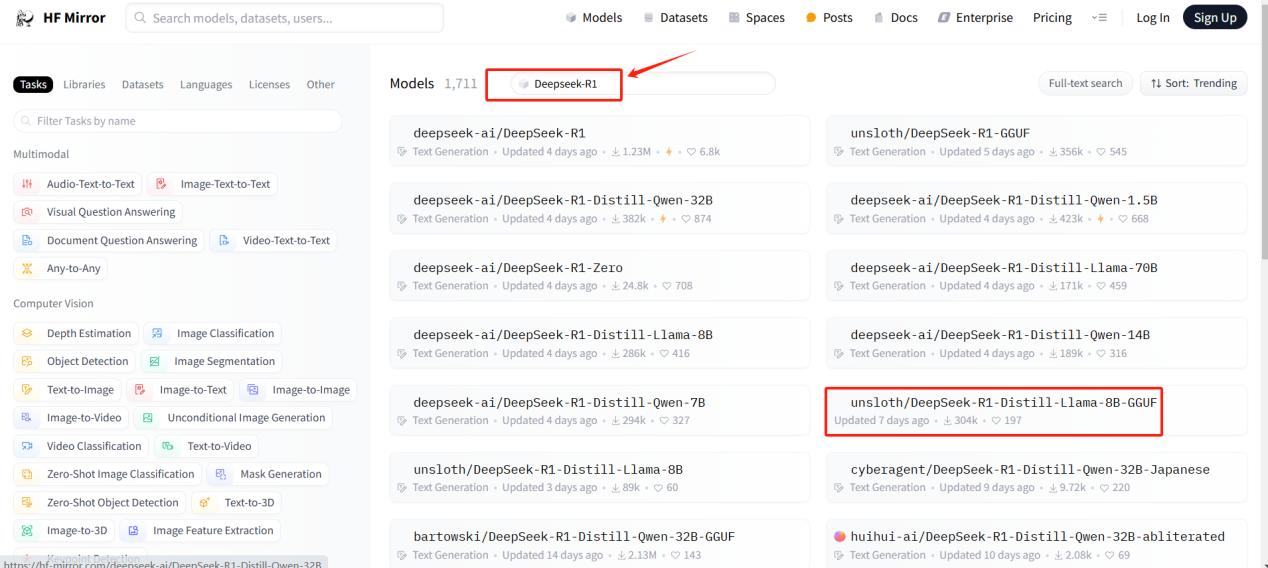
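
Besides the interactive prompt, ollama run also accepts the prompt as an argument, which is handy for one-off questions from a script. The wrapper below is a sketch under that assumption; ask_once and the OLLAMA_BIN override are names made up for this example (the override only exists so the wrapper can be exercised without Ollama installed).

```shell
# Send a single prompt to a model and print the reply, without entering
# the interactive session. OLLAMA_BIN is a hypothetical override that
# defaults to the real `ollama` binary.
ask_once() {
    model="$1"
    prompt="$2"
    "${OLLAMA_BIN:-ollama}" run "$model" "$prompt"
}

# Example (requires the model from this step to be downloaded):
#   ask_once deepseek-r1:1.5b "Explain quantum entanglement in simple terms."
```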

Method 2: Download a Model from HuggingFace

Downloading from HuggingFace allows you to select a specific model size. This is helpful because the Raspberry Pi 5 (even with 16GB RAM) cannot handle larger models efficiently.

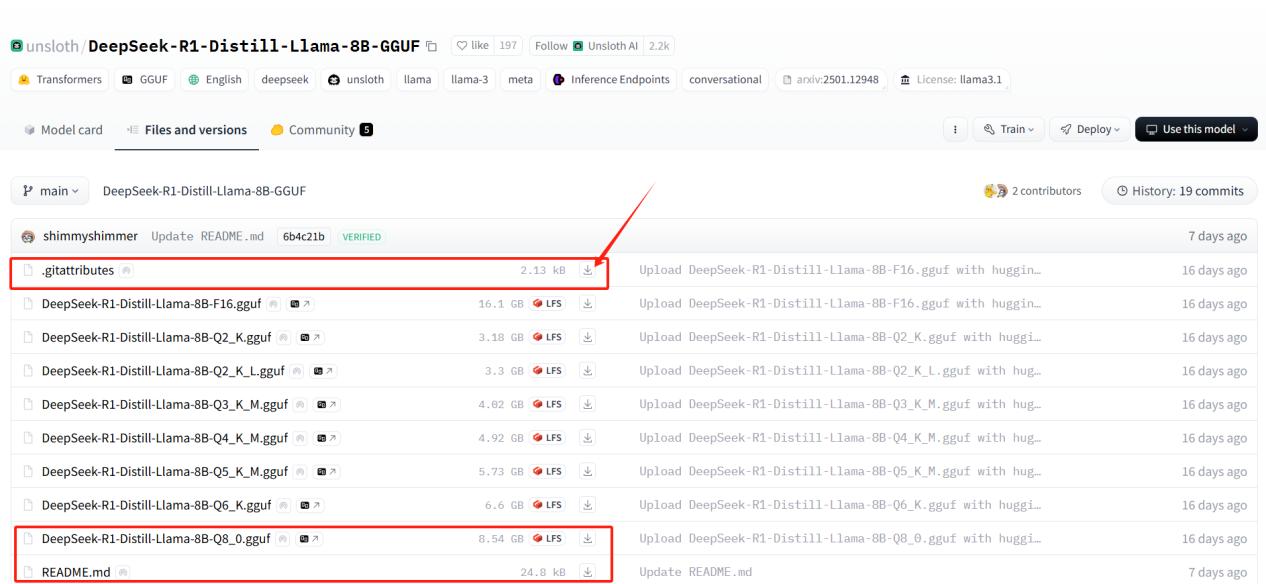

Choosing a Model from HuggingFace

1. Visit HuggingFace and search for DeepSeek-R1.

2. Select a smaller distilled version suitable for the Raspberry Pi 5.

3. For this guide, we’ll use DeepSeek-R1-Distill-Llama-8B-GGUF, which has 8 billion parameters and is optimized for performance.

4. Download the Q8_0 model file (~8.54GB), as it balances quality and efficiency.

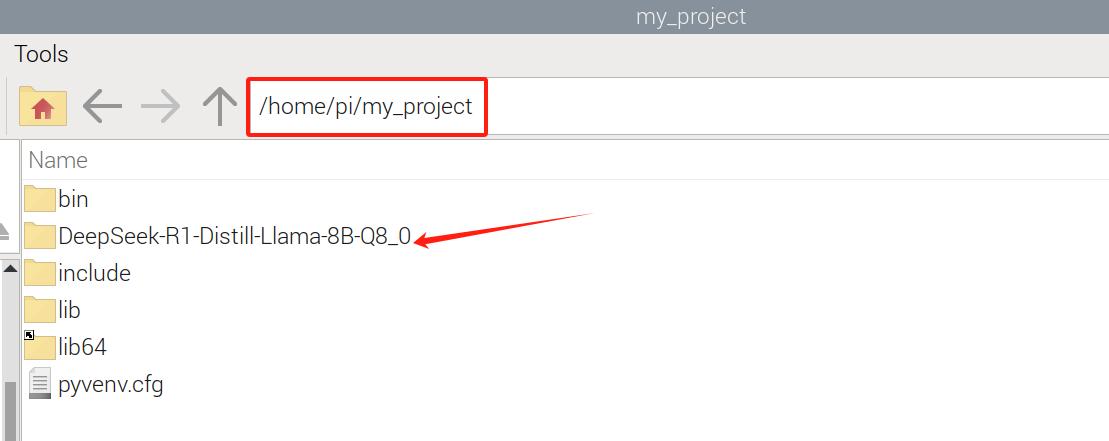
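
Before committing to a multi-gigabyte download, it can help to compare the model file size with the memory your Pi actually has free. The sketch below is a rough heuristic, not a guarantee: it assumes the whole file must fit in RAM and reserves 1 GiB of headroom, a figure chosen for illustration. The function name fits_in_memory is also invented here.

```shell
# Succeed if a model file of model_bytes fits into avail_kb of memory
# while leaving headroom_kb free. The 1 GiB default headroom is an
# assumption; real usage also depends on context length and other factors.
fits_in_memory() {
    model_bytes="$1"
    avail_kb="$2"
    headroom_kb="${3:-1048576}"
    model_kb=$(( (model_bytes + 1023) / 1024 ))   # round up to whole kB
    [ $(( model_kb + headroom_kb )) -le "$avail_kb" ]
}

# On Linux, MemAvailable in /proc/meminfo is reported in kB.
avail_kb="$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo 2>/dev/null)"
echo "Available memory: ${avail_kb:-unknown} kB"
```

For the Q8_0 file in this guide (about 8.54 GB), a call such as fits_in_memory 8540000000 "$avail_kb" would succeed only when well over 9 GB is free under these assumptions.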

Transferring Model Files to Raspberry Pi

After downloading the three files, transfer them to your Raspberry Pi using FileZilla or another file transfer tool.
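
Large files occasionally get corrupted or truncated in transit, so it is worth comparing checksums on both machines after the transfer. The following is a minimal sketch using sha256sum from GNU coreutils; the helper names file_sha256 and sha256_matches are made up for this example.

```shell
# Print only the SHA-256 hex digest of a file.
file_sha256() {
    sha256sum "$1" | cut -d' ' -f1
}

# Succeed if the file's digest equals the expected hex string.
sha256_matches() {
    [ "$(file_sha256 "$1")" = "$2" ]
}

# Typical use: run file_sha256 on the machine that downloaded the file,
# then on the Raspberry Pi run:
#   sha256_matches /path/to/model.gguf <digest-from-the-other-machine>
```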

Step 4: Importing the GGUF Model into Ollama

Once the model files are on the Raspberry Pi, we need to import them into Ollama.

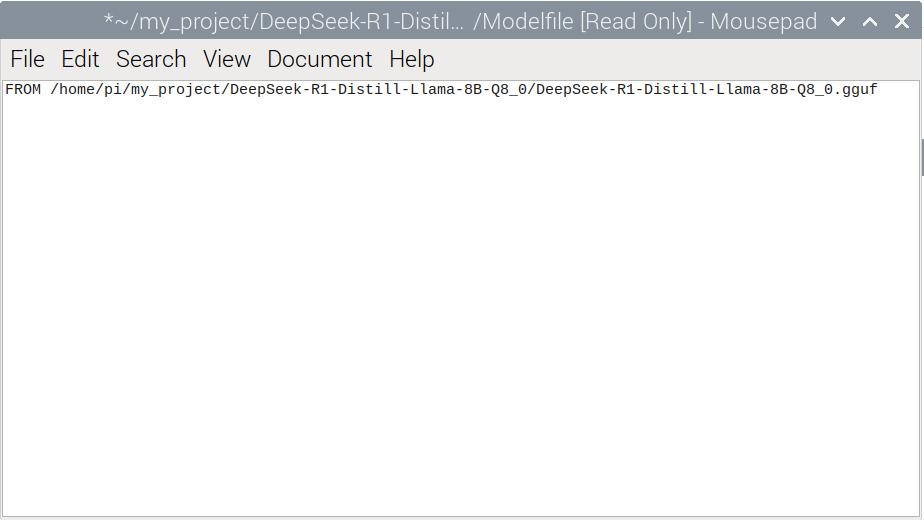

Creating a Modelfile

First, create a Modelfile in the model folder and grant it full permissions:

| sudo touch /home/pi/my_project/DeepSeek-R1-Distill-Llama-8B-Q8_0/Modelfile |

| sudo chmod 777 /home/pi/my_project/DeepSeek-R1-Distill-Llama-8B-Q8_0/Modelfile |

Adding Model Configuration

Open the Modelfile and add:

| FROM /home/pi/my_project/DeepSeek-R1-Distill-Llama-8B-Q8_0/DeepSeek-R1-Distill-Llama-8B-Q8_0.gguf |

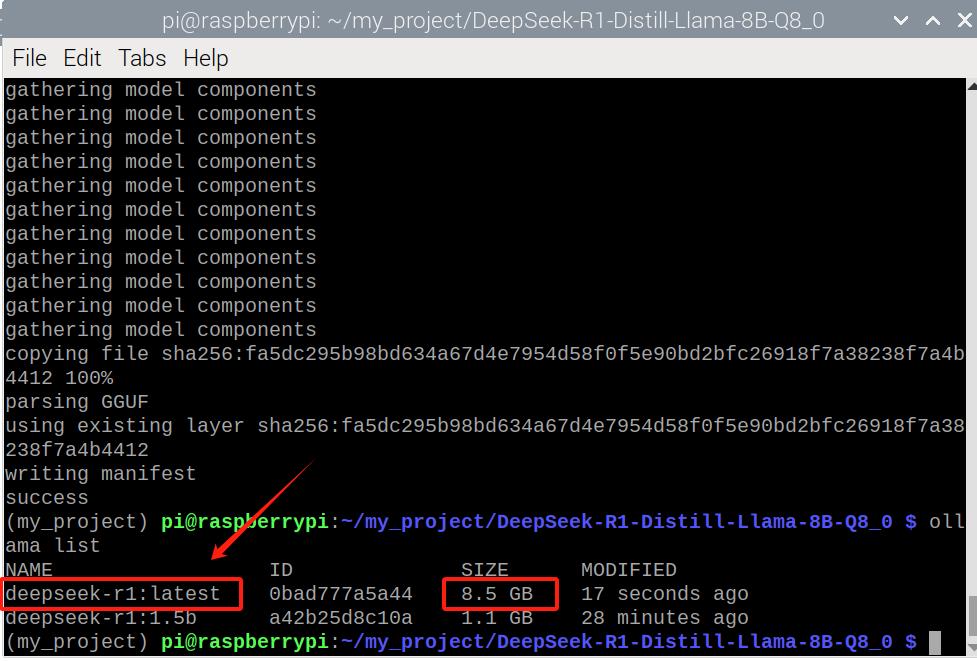
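
If you prefer not to open an editor, the same one-line Modelfile can be written from the terminal. This is a sketch only; write_modelfile is a hypothetical helper, and it simply writes a single FROM line pointing at the GGUF path you give it.

```shell
# Write a one-line Modelfile whose FROM directive points at a local GGUF file.
# Arguments: path to the .gguf file, path of the Modelfile to (over)write.
write_modelfile() {
    gguf_path="$1"
    out_file="$2"
    printf 'FROM %s\n' "$gguf_path" > "$out_file"
}

# Example with the paths used in this guide:
#   dir=/home/pi/my_project/DeepSeek-R1-Distill-Llama-8B-Q8_0
#   write_modelfile "$dir/DeepSeek-R1-Distill-Llama-8B-Q8_0.gguf" "$dir/Modelfile"
```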

Now, from inside the model folder (so that the relative path Modelfile resolves), create the model in Ollama:

| ollama create DeepSeek-R1 -f Modelfile |

Verify that the model is available:

| ollama list |

Now, start the model:

| ollama run deepseek-r1:latest |

Ask it a question and observe its response.
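
To check for the imported model from a script instead of reading the list by eye, the listing can be filtered. The sketch below assumes that ollama list prints a header row followed by one model per line with the name in the first column, and it compares names case-insensitively; model_listed is an invented helper name.

```shell
# Read an `ollama list`-style table on stdin and succeed if the given
# model name appears in the first column (header row skipped).
# Assumption: the name is the first whitespace-separated field.
model_listed() {
    want="$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')"
    awk -v want="$want" '
        NR > 1 && tolower($1) == want { found = 1 }
        END { exit !found }
    '
}

# Example (requires Ollama):
#   ollama list | model_listed deepseek-r1:latest && echo "model is available"
```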

Comparison of Model Options

We have tested two versions of DeepSeek-R1:

1. DeepSeek-R1-1.5B (downloaded directly from Ollama).

2. DeepSeek-R1-Distill-Llama-8B (downloaded from HuggingFace).

Performance Differences

| Model | Size | Response Quality | Speed | RAM Usage |
|---|---|---|---|---|
| 1.5B (Ollama) | Small | Decent | Fast | Low |
| 8B (HuggingFace) | Larger | Better | Slower | High |

If you prioritize faster responses, use DeepSeek-R1-1.5B. If you want higher-quality answers, use DeepSeek-R1-Distill-Llama-8B, but be mindful of RAM limitations.

Conclusion

By following this guide, you can successfully run DeepSeek-R1 on a Raspberry Pi 5 using the Ollama platform. If you want an easy setup, downloading directly from Ollama is best. However, if you need a specific model size, HuggingFace provides more flexibility.

Now, experiment with your model and see what it can do!

In this tutorial, we use the new CrowPi 2 with Raspberry Pi 5. CrowPi 2 is a portable Raspberry Pi-powered laptop with an IPS screen, a 2MP camera, a microphone, and built-in stereo speakers. It features a detachable magnetic keyboard and a compartment for a power bank or extra components, making it ideal for learning and working on the go.